While AI will never replace human creativity, it can certainly help with productivity. Today I’m sharing my Voice Analyser MCP – an experimental tone of voice guideline generator that runs from a website’s XML sitemap.

The point of this project was to understand how AI communicates and to learn more about the detectable patterns it uses when generating a response.

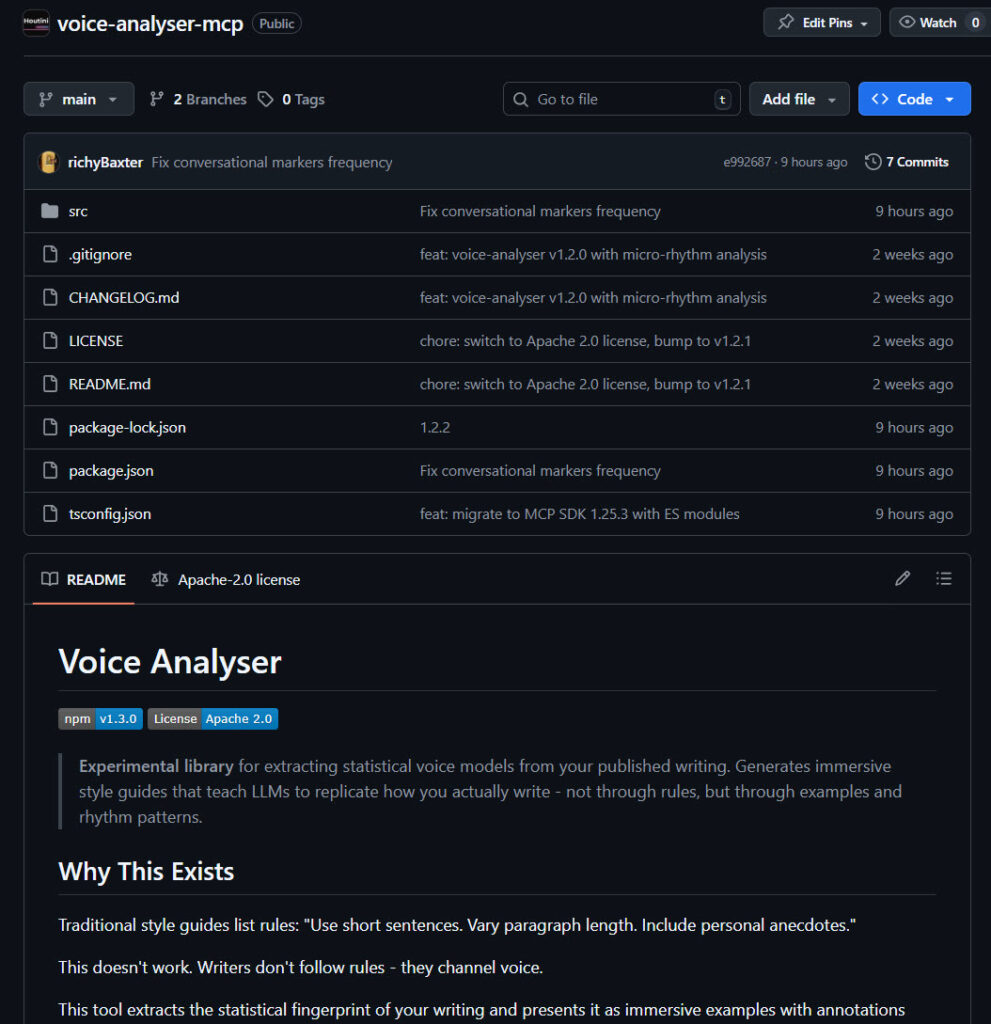

Voice Analyser is built on the latest version of the Model Context Protocol specification. It exposes three tools over the stdio transport: corpus collection from XML sitemaps, linguistic analysis, and style guide generation. When you trigger the MCP, the collection phase uses Cheerio to extract article content from each URL listed in the sitemap. It strips out navigation artifacts, converts the HTML to clean markdown, then stores everything locally with metadata.
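
To make the cleanup step more concrete, here's a minimal, dependency-free sketch of stripping page furniture and converting what's left to markdown. It is a hypothetical stand-in, not the tool's code. The tool itself uses Cheerio, where the equivalent removal would be something like `$("nav, header, footer").remove()`. This version uses regular expressions instead, and the function name `htmlToMarkdown` and the list of tags treated as boilerplate are assumptions.

```typescript
// Hypothetical sketch of the "strip boilerplate, keep prose" step.
// Voice Analyser uses Cheerio for this; regular expressions are only a stand-in
// and will not cope with every real-world page (e.g. nested elements of the same tag).

// Elements assumed to hold navigation or page furniture rather than article text.
const BOILERPLATE_TAGS = ["nav", "header", "footer", "aside", "script", "style"];

function htmlToMarkdown(html: string): string {
  let out = html;

  // 1. Drop whole boilerplate elements, including everything inside them.
  for (const tag of BOILERPLATE_TAGS) {
    const re = new RegExp(`<${tag}\\b[^>]*>[\\s\\S]*?</${tag}>`, "gi");
    out = out.replace(re, "");
  }

  // 2. Turn <h1>-<h3> into markdown headings (#, ##, ###).
  out = out.replace(
    /<h([1-3])\b[^>]*>([\s\S]*?)<\/h\1>/gi,
    (_m, level: string, text: string) => `\n\n${"#".repeat(Number(level))} ${text.trim()}\n\n`,
  );

  // 3. End each paragraph with a blank line.
  out = out.replace(/<\/p>/gi, "\n\n");

  // 4. Remove any remaining tags, trim each line and collapse extra blank lines.
  //    HTML entities such as &amp; are left as-is in this sketch.
  out = out.replace(/<[^>]+>/g, "");
  return out
    .split("\n")
    .map((line) => line.trim())
    .join("\n")
    .replace(/\n{3,}/g, "\n\n")
    .trim();
}
```

For a page with a `<nav>` menu, an `<article>` containing an `<h1>` and two paragraphs, and a `<footer>`, only the heading and the two paragraphs survive.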

It rate-limits its requests with a delay between each one and parses sitemap XML with fast-xml-parser, and extraction seems quite reliable. All the data is saved as a corpus in the working directory you specify.
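
The collection loop can be sketched roughly like this, assuming a standard `<urlset>` sitemap. It's a simplified illustration rather than the repo's implementation: a regular expression stands in for fast-xml-parser, and `extractLocs`, `collectPolitely` and the one-second default delay are hypothetical.

```typescript
// Hypothetical sketch: read page URLs from a sitemap, then fetch them one at a
// time with a pause between requests. Voice Analyser parses XML with
// fast-xml-parser; this regex is a simplified stand-in for <urlset> sitemaps.

function extractLocs(sitemapXml: string): string[] {
  const locs: string[] = [];
  const re = /<loc>\s*([^<]+?)\s*<\/loc>/g;
  let m: RegExpExecArray | null;
  while ((m = re.exec(sitemapXml)) !== null) {
    locs.push(m[1]); // the URL text, with surrounding whitespace excluded
  }
  return locs;
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Fetches each URL sequentially, waiting `delayMs` between requests so the
// target site isn't hammered. `fetchPage` is injected so it can be the global
// fetch (Node 18+) or a test double. Failed pages are logged and skipped.
async function collectPolitely(
  urls: string[],
  fetchPage: (url: string) => Promise<string>,
  delayMs = 1000,
): Promise<Map<string, string>> {
  const pages = new Map<string, string>();
  for (let i = 0; i < urls.length; i++) {
    if (i > 0) await sleep(delayMs); // rate limit: pause before every request after the first
    const url = urls[i];
    try {
      pages.set(url, await fetchPage(url));
    } catch (err) {
      console.warn(`Skipping ${url}: ${String(err)}`);
    }
  }
  return pages;
}
```

In Node 18 or later you could pass the built-in `fetch`, for example `collectPolitely(urls, async (url) => (await fetch(url)).text())`. Fetching sequentially keeps the request rate predictable, at the cost of a slower crawl on large sitemaps.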

During processing, the MCP runs 14 separate statistical engines across your corpus before it generates the style guide. If you'd like to review the methodology, take a look at the GitHub repo.
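
As a hypothetical illustration of the kind of measurement a voice analysis can build on (not one of the tool's actual engines), this sketch computes sentence-length statistics for a block of text. The function name and the naive punctuation-based sentence split are assumptions.

```typescript
// Hypothetical illustration: sentence-length statistics for one text.
// This is NOT one of Voice Analyser's engines, just an example of a
// simple stylometric measurement.

interface SentenceStats {
  sentences: number;   // number of sentences found
  meanWords: number;   // average words per sentence
  stdDevWords: number; // population standard deviation of words per sentence
}

function sentenceLengthStats(text: string): SentenceStats {
  // Naive split on runs of ., ! or ?; abbreviations such as "e.g." will over-split.
  const sentences = text
    .split(/[.!?]+/)
    .map((s) => s.trim())
    .filter((s) => s.length > 0);

  const lengths = sentences.map((s) => s.split(/\s+/).length);
  const n = lengths.length;
  if (n === 0) return { sentences: 0, meanWords: 0, stdDevWords: 0 };

  const mean = lengths.reduce((sum, len) => sum + len, 0) / n;
  const variance = lengths.reduce((acc, len) => acc + (len - mean) ** 2, 0) / n;
  return { sentences: n, meanWords: mean, stdDevWords: Math.sqrt(variance) };
}
```

For example, `sentenceLengthStats("I like short sentences. This one is a bit longer than that! Why?")` finds 3 sentences (4, 8 and 1 words), so the mean is about 4.33 words and the standard deviation about 2.87. A writer who mixes very short and very long sentences shows a higher spread than text with uniform sentence lengths.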

The Caveat

Now, I’m no advocate of using AI-generated content for search, *but* there are plenty of use cases where a tool like this can help: automation, AI-generated snippets (a summary feature, for example) and so on.

My favourite example is internal company documentation. Say you’re building an agent that generates financial reports, but the commentary sounds like ChatGPT. People can spot that, and it reads terribly.

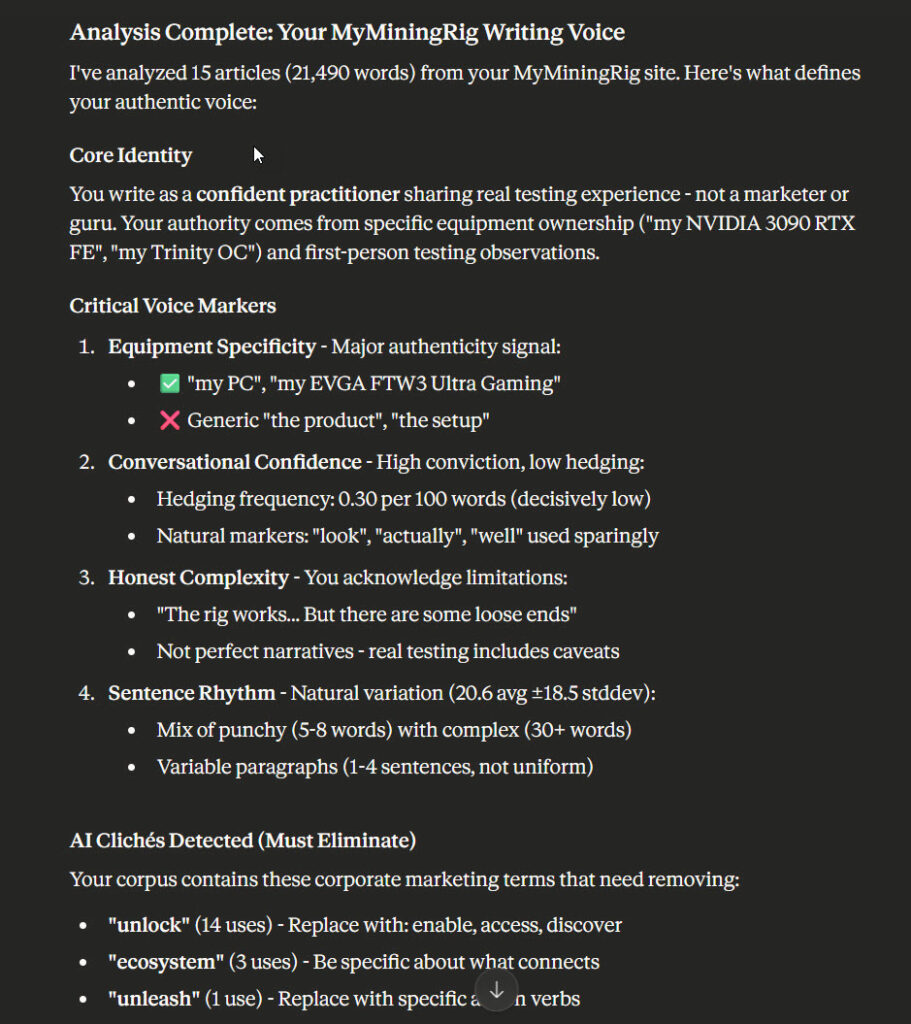

If there’s a body of work online that makes you think “I like this tone of voice; how does it work?”, the voice analysis in this MCP can study it by fetching that content and saving it on your machine. From the saved corpus, it generates a tone of voice guide that you can include in your agent prompts during content generation.
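
As a rough sketch of that last step, assuming your agent takes a list of chat messages, the generated guide can simply be placed in the system prompt. The message shape, the `withVoiceGuide` name, the example instruction and the `<voice_guide>` delimiters are hypothetical and not tied to any particular SDK or to this MCP.

```typescript
// Hypothetical sketch: put a generated tone-of-voice guide into an agent's
// system prompt. The message shape mirrors common chat-completion APIs but is
// not tied to any particular SDK.
type ChatMessage = { role: "system" | "user"; content: string };

function withVoiceGuide(styleGuide: string, task: string): ChatMessage[] {
  const system = [
    "You write commentary for internal financial reports.",
    "Follow this tone of voice guide closely:",
    "<voice_guide>",
    styleGuide.trim(),
    "</voice_guide>",
  ].join("\n");

  return [
    { role: "system", content: system },
    { role: "user", content: task },
  ];
}
```

Wrapping the guide in explicit delimiters keeps it separate from the task instructions, which also makes it easy to swap in a different guide later.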

How to Install

If you’re familiar with MCP servers, all you need is the snippet below, which runs the server with npx. Alternatively, clone the GitHub repo.

Add this to your MCP config.json:

```json
{
  "mcpServers": {
    "voice-analysis": {
      "command": "npx",
      "args": ["-y", "@houtini/voice-analyser@latest"]
    }
  }
}
```

Then restart your AI assistant!

If you’re not sure what to do, read this article explaining how to install an MCP server, and make sure you have Desktop Commander installed for file I/O.

Use Example

As I mentioned earlier, for written report commentary and general boilerplate work, the style guide output does a good job of mimicking your tone of voice. It isn’t perfect and the copy will need a review and some tweaking, but it’s quite convincing out of the box. The output can’t replace original, human prose, but for boilerplate work, email, AI-assisted storytelling and AI summaries, I think you’ll like it.

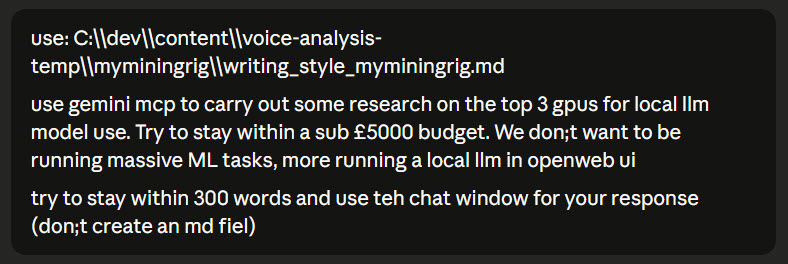

Prompt: (sorry for the typos)

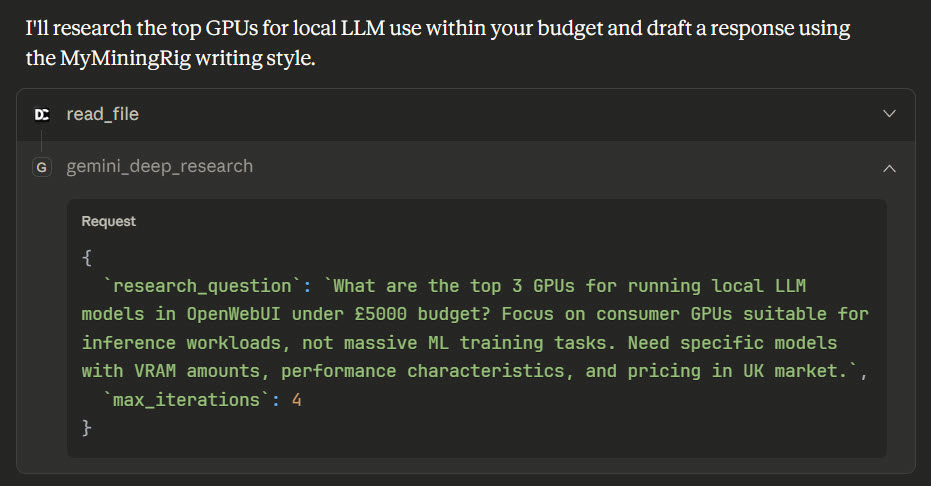

Working:

The output is reasonably compelling and sounds like me. My writing style, which I’m not always proud of, contains vagueness here and there (the sort of thing my AI SEO analyser likes to weed out).

However, aside from the “Here’s the thing, though”, which needs some thought, it tracks:

The NVIDIA RTX 4090 sits at the top. It’s got 24GB of GDDR6X VRAM and frankly, it’s the fastest consumer card you can buy for this work. New units run about £1,800-£2,000. You’re looking at roughly 128 tokens per second on 8B models, which is excellent. It handles large quantised models without fuss, and NVIDIA’s CUDA support makes everything simpler from a software perspective.

The RTX 3090 deserves serious consideration. Same 24GB of VRAM, previous generation Ampere architecture. Still very capable – about 112 tokens per second on those same 8B models. The interesting bit here is the used market. You can pick these up for £800-£900 used, which is half what they cost new. That’s significant when you’re trying to maximise VRAM capacity.

It’s not quite powerful enough to fool AI detection, but that wasn’t the point of the exercise. Give it a try and see how much it can sound like you!