Aside from using Claude to code, I use Claude Desktop for various aspects of my working day: research, data analysis and writing. It’s really powerful, reliable when used in the way it was intended, and, as a productivity tool, it’s astonishing.

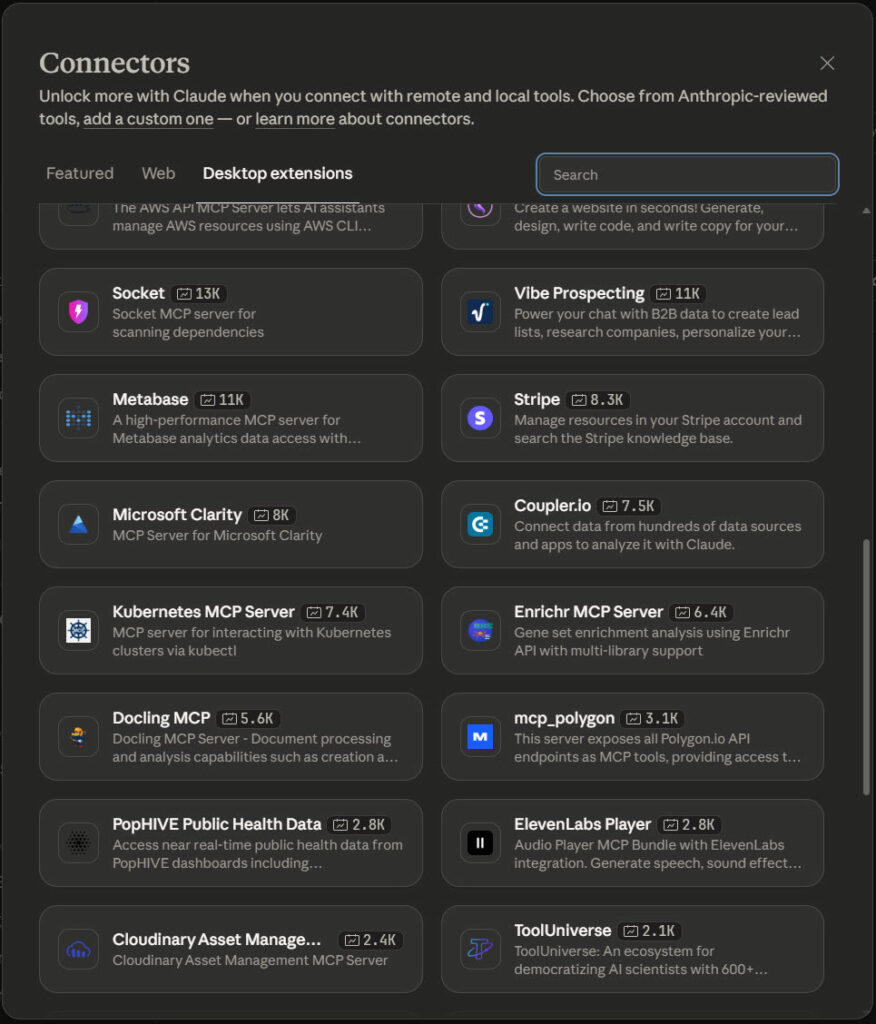

While Claude is brilliant, it’s the extensions, the MCP servers, that really make it powerful. MCPs (or extensions, if you use the Claude Extensions library) allow you to connect Claude to the outside world. Think: writing files, performing searches, fetching data – that type of thing.

First, some housekeeping: if you’re new to MCP tools used in an AI assistant like Claude Desktop, I’ve written a starter guide to Claude Desktop here. Hopefully, this should guide you through a configuration path that teaches you everything you need to know.

If you’ve only used the web version of Claude and you wonder what all the fuss is about, let me introduce you to the world of MCP connections:

Getting Started

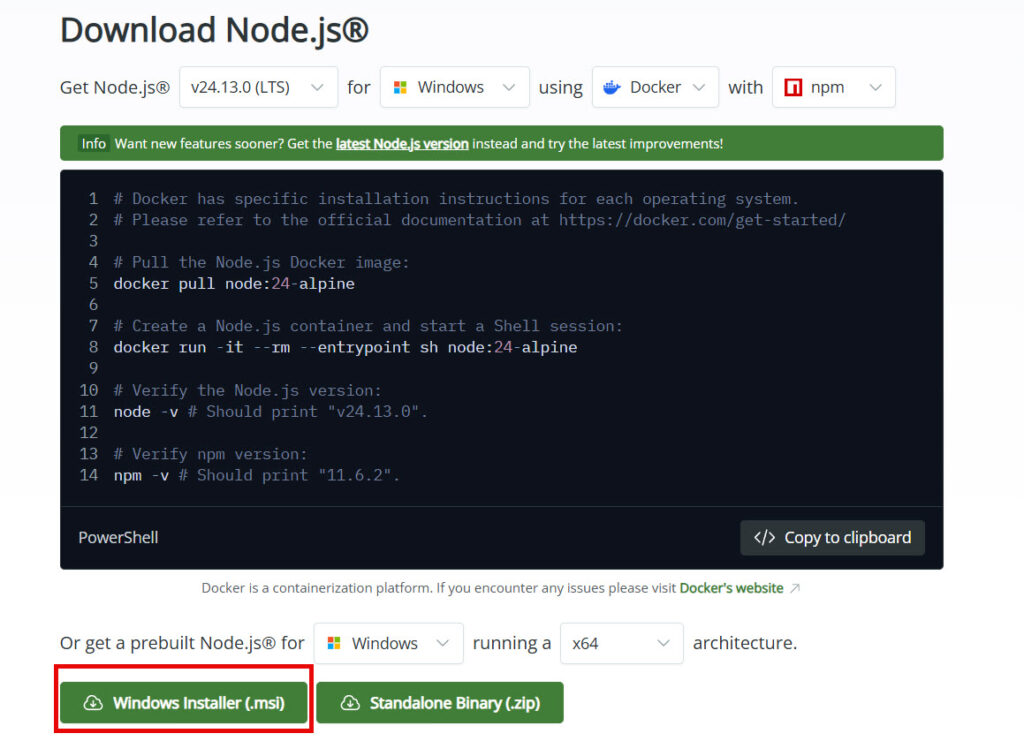

If you’re starting out for the first time, the only prerequisite is Node, as I’ve selected extensions available either via the extensions library in Claude Desktop or via npx (Node’s package runner).

All you have to do is install Node:

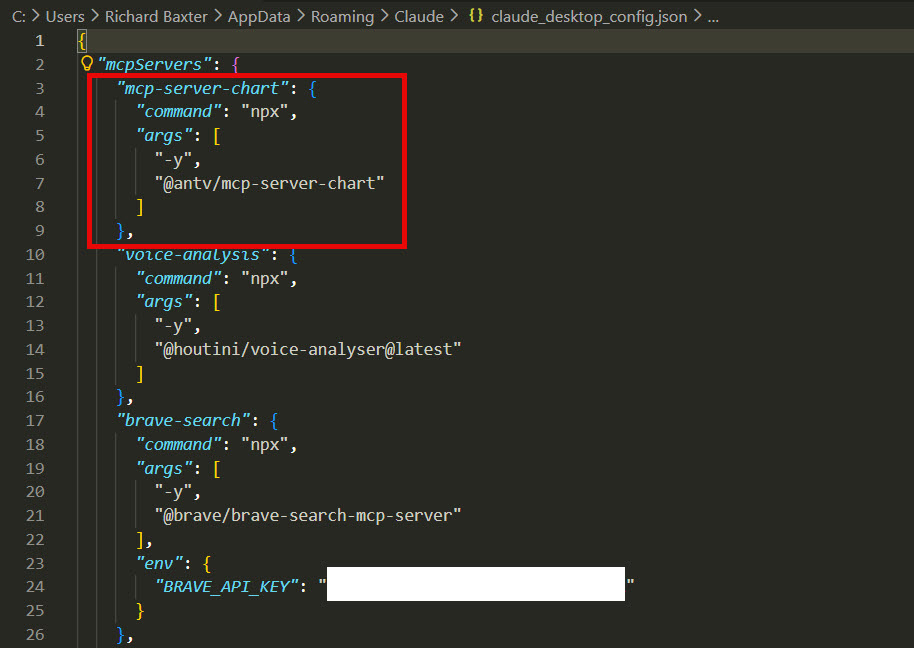

You can add or edit MCP servers in your claude_desktop_config.json, which can be found at this path if you’re using Windows:

C:\Users\[your username]\AppData\Roaming\Claude\claude_desktop_config.json

The file is JSON, with each MCP server listed as a named entry under a top-level "mcpServers" key.
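As a minimal sketch, assuming you install just two of the servers covered below (the two that need no API key), the whole file would look something like this:

```json
{
  "mcpServers": {
    "desktop-commander": {
      "command": "npx",
      "args": ["-y", "@wonderwhy-er/desktop-commander"]
    },
    "context7": {
      "command": "npx",
      "args": ["-y", "@upstash/context7-mcp"]
    }
  }
}
```

Each snippet in this article is shown with its own "mcpServers" wrapper so it can stand alone. When you add a second or third server, paste only the inner named entry into the existing "mcpServers" object, separated by a comma, rather than adding another wrapper. Restart Claude Desktop afterwards so the change is picked up.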

In no particular order, here are the “best” MCPs that I use to do my job (I use different MCPs for Claude Code, which we’ll look at in another article).

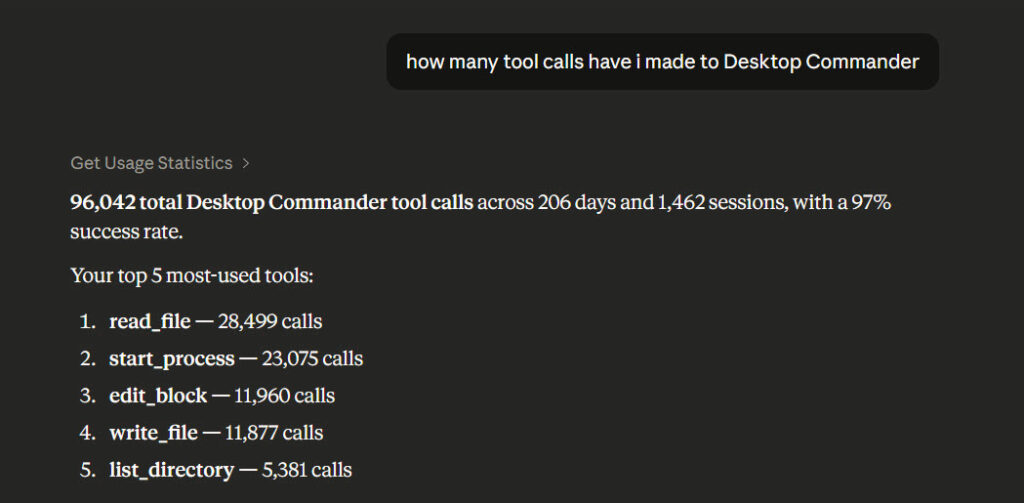

Desktop Commander

Without Desktop Commander, Claude has no real interaction capability with files or processes outside of its own software.

Desktop Commander allows Claude to read and write files, run terminal commands, manage various processes (such as a Node.js execution), and search for files through your filesystem. While that sounds basic at first, it’s incredibly powerful. Without it, Claude Desktop is simply a chatbot. You might as well stay on the web version. With Desktop Commander installed, Claude becomes something closer to a proper development assistant that can actually touch your files.

If you’re thinking, “wait, I shouldn’t give Claude the ability to create a file!”, relax. Desktop Commander is very, very well tested. You can ringfence the file access to a specific directory by setting a working directory in your prompt.

One thing I think is important: Claude Desktop ships with its own “Code Execution” feature built in. Turn it off. Desktop Commander does the same job but much better, and critically, you get to specify in your prompt which directory Claude uses. Claude’s built-in feature runs in a sandboxed environment that is quite restrictive, and worse, Claude basically loses entire files.

    {
      "mcpServers": {
        "desktop-commander": {
          "command": "npx",
          "args": ["-y", "@wonderwhy-er/desktop-commander"]
        }
      }
    }

Firecrawl

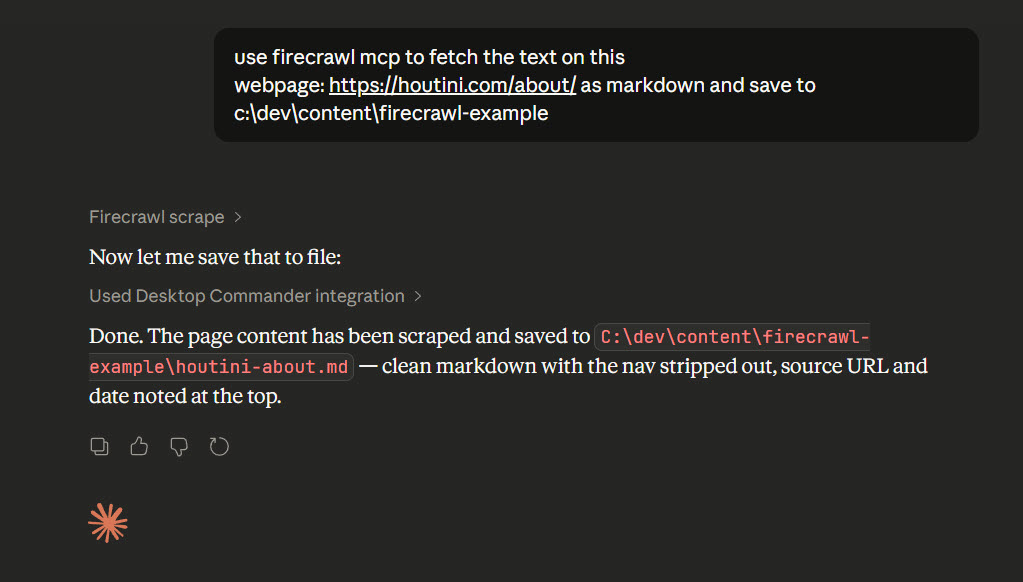

Firecrawl’s service is part scraper, part AI-powered, ultra-configurable retriever of structured data. It’s my go-to data scraper; I use it as an MCP, in n8n, and as the supporting act in the Cloudflare infrastructure I use to keep fresh AI jobs piped through to this very website.

The tool has six main capabilities: scrape (single page), crawl (follow links across a site), map (discover all URLs), search (web search with optional scraping), extract (structured data via LLM), and agent (autonomous browsing). In the MCP context, I mostly use scrape.

There’s a great deal more to Firecrawl than just scraping, and I will be demonstrating more use cases soon. For now, I explain here how my job content scraper works, which uses Firecrawl as a JS-friendly and very versatile service.

You’ll need an API key from Firecrawl, then set up the MCP as follows:

    {
      "mcpServers": {
        "firecrawl-mcp": {
          "command": "npx",
          "args": ["-y", "firecrawl-mcp"],
          "env": {
            "FIRECRAWL_API_KEY": "your-api-key"
          }
        }
      }
    }

Supadata

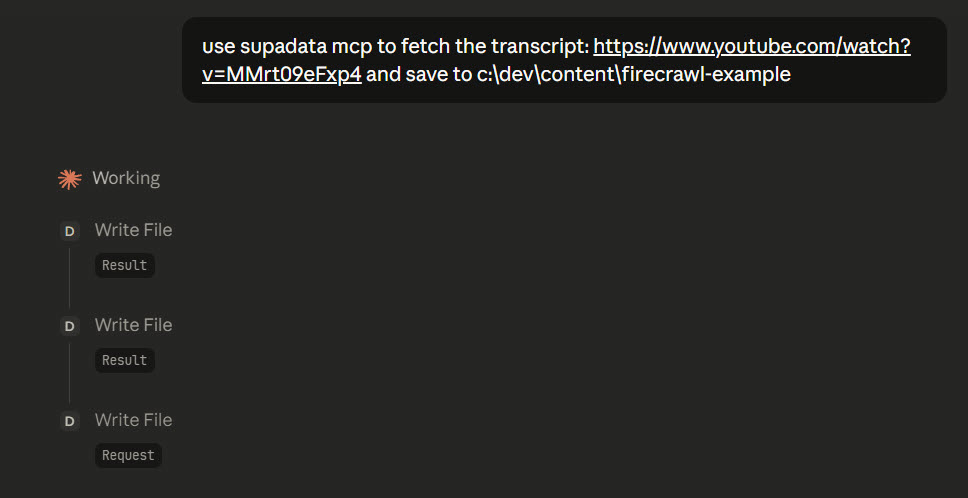

Supadata sits as a core component for my content research and data collection work. Rather than having to watch an entire YouTube video, I can grab the transcript with the MCP, then filter out the key research points I need. It supports YouTube, TikTok, Instagram, Twitter (X), and direct file URLs for transcript extraction. This superpower alone makes it worth installing.

The transcript extraction pulls the actual captions and subtitles from each video, and Supadata also offers media metadata extraction.

My typical workflow: find a relevant video, pull the transcript with Supadata, then ask Claude to extract the specific technical details I’m researching. It’s particularly good for staying current with rapidly evolving technology topics: science talks, news, product announcements, expert interviews and so on. It’s data for data journalism.

Beyond transcripts, Supadata also offers site scraping. It’s much simpler than Firecrawl (for example, it only outputs markdown and has no JS site support), but it’s reliable.

    {
      "mcpServers": {
        "supadata": {
          "command": "npx",
          "args": ["-y", "@supadata/mcp"],
          "env": {
            "SUPADATA_API_KEY": "your-api-key"
          }
        }
      }
    }

Brave Search

Claude Desktop already has built-in web search. So why add Brave Search on top?

It’s specialisation and diversity. Claude’s built-in web search is good for general queries, but Brave Search gives you dedicated endpoints for news, images, videos, and local businesses. If I’m researching what’s been published about a topic in the last 24 hours, the news search with freshness: "pd" is far more targeted than a general web search.
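To make that concrete, a news search limited to the past day might carry arguments along these lines. This is an illustrative sketch only: the freshness value is the one mentioned above, while the "query" field name and the search terms are assumptions, and in practice Claude fills these in from your prompt rather than you writing them by hand.

```json
{
  "query": "model context protocol",
  "freshness": "pd"
}
```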

Here’s the MCP package:

    {
      "mcpServers": {
        "brave-search": {
          "command": "npx",
          "args": ["-y", "@modelcontextprotocol/server-brave-search"],
          "env": {
            "BRAVE_API_KEY": "your-api-key-here"
          }
        }
      }
    }

You’ll need a Brave Search API key – there’s a free tier that covers casual use.

Context7

Context7 solves a niche but important problem: Claude’s training data has a cutoff, so if you’re doing research on an app PRD (writing specs, requirements, planning implementation), then Context7, used up front, can save hours of headaches.

I use Context7 for this type of exploration: “Use Context7 to find the latest libraries for this application and consider if the changes may be breaking.” From there, Claude will know if the packages it *thinks* it should be working with are actually current. They rarely are; a knowledge cutoff of several months is an age in development.

    {
      "mcpServers": {
        "context7": {
          "command": "npx",
          "args": ["-y", "@upstash/context7-mcp"]
        }
      }
    }

Chrome DevTools

Chrome DevTools MCP gives Claude direct control over a Chrome browser. You can prompt it to click elements, fill forms, take screenshots, check the console for JavaScript errors, navigate pages, run JavaScript, monitor network requests, and run performance traces. It’s browser automation through conversation, and the data Claude receives back makes it an excellent debugging tool.

It can chew through the context window, so it pays to be very specific with your prompt.

How to install:

    {
      "mcpServers": {
        "chrome-devtools": {
          "command": "npx",
          "args": ["-y", "chrome-devtools-mcp@latest"]
        }
      }
    }

Note: this is Google’s official Chrome DevTools MCP, not a third-party wrapper. It collects usage statistics by default – add "--no-usage-statistics" to the args if you’d rather opt out.
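With the opt-out applied, the entry would look something like the sketch below. It assumes the flag is simply appended after the package name, since npx passes anything after the package name through to the package itself:

```json
{
  "mcpServers": {
    "chrome-devtools": {
      "command": "npx",
      "args": ["-y", "chrome-devtools-mcp@latest", "--no-usage-statistics"]
    }
  }
}
```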

Here’s more on the Chrome DevTools MCP.

Google Knowledge Graph

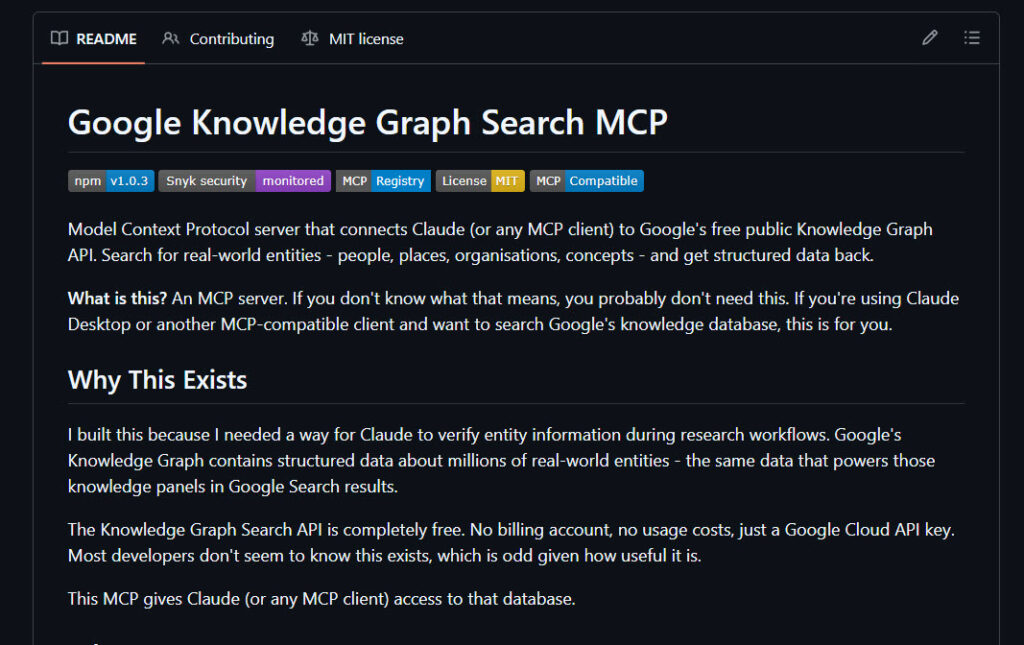

The Knowledge Graph MCP is probably the most niche tool on this list, but it fills a specific gap well. It gives Claude access to Google’s structured entity database – the same data that powers those info panels you see on the right side of Google search results.

I built this because I needed a way for Claude to verify entity information during research workflows. Google’s Knowledge Graph contains structured data about millions (billions?) of real-world entities.

The Knowledge Graph Search API has a generous free tier with 100,000 read calls per day per project. You just need to set up a Google Cloud API key. Most developers don’t seem to know this exists, which is odd given how useful it is.

This MCP gives Claude (or any MCP client) access to that database.

    {
      "mcpServers": {
        "google-knowledge-graph": {
          "command": "npx",
          "args": ["-y", "@modelcontextprotocol/server-google-knowledge-graph"],
          "env": {
            "GOOGLE_KNOWLEDGE_GRAPH_API_KEY": "your-api-key"
          }
        }
      }
    }

Gemini MCP

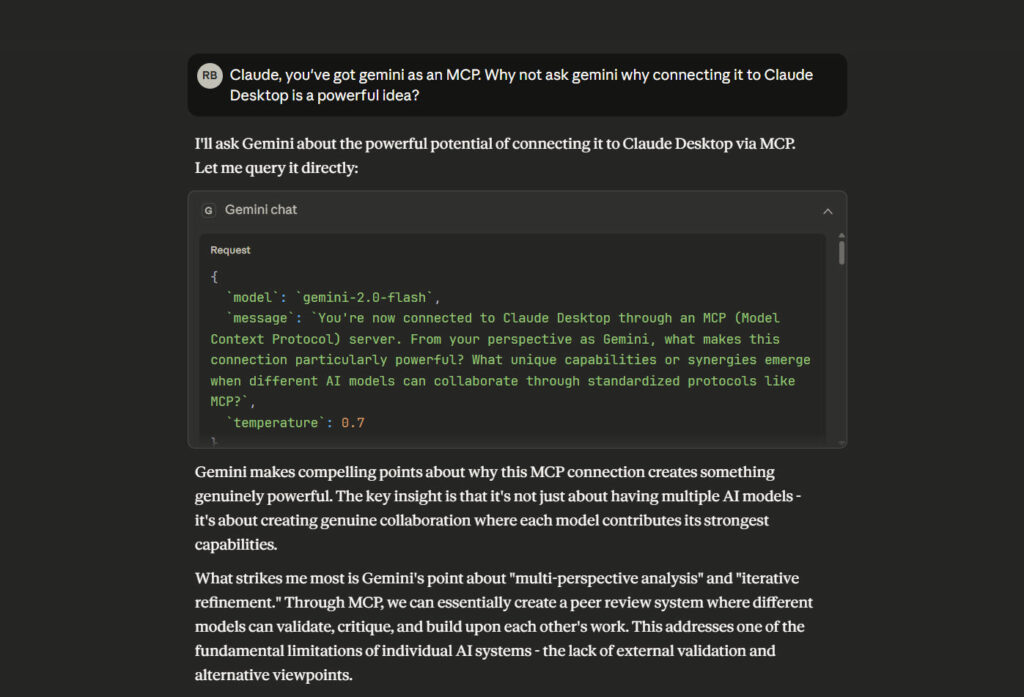

I think I’m saving the best till last here – my very own Gemini MCP. I really don’t think people realise yet just how powerful it is for Claude to have an MCP connection to grounded Gemini results.

When we (Claude and I) get stuck on a bug, I tell Claude to check with Gemini. It’s also powerful for content research: checking facts and data.

How to Install:

    {
      "mcpServers": {
        "gemini": {
          "command": "npx",
          "args": ["@houtini/gemini-mcp"],
          "env": {
            "GEMINI_API_KEY": "your-api-key-here"
          }
        }
      }
    }

You’ll need to get yourself a Gemini API key, which is easily done via Google AI Studio.